Question authoring

Margaret Fleck, University of Illinois

|

Question authoring

|

Large, frequently-run courses require high-quality randomization of exams. This can discourage both cheating (esp. for online exams with limited proctoring) and blind memorization. Online platforms and generators for hardcopy exams can easily do two types of randomization: picking a variant for each individual question and randomizing the order in which questions are delivered. The hard tasks are producing a sufficient range of variants for each problem and making the questions available across different delivery modes.

Our campus has several good ways to deliver randomized exams, including

The advantages and disadvantages have changed over time. Online platforms appear, disappear, and evolve. Instructors have only limited control over where their tests can be given (e.g. testing center vs. classroom) in any particular term. Recently, we have had the experience of being thrown online in the middle of a term. Therefore, it is important for a course to quickly be able to switch delivery method.

My solution to this is to move our latex problem collection to a simple structured storage format, based on YAML. This format supports latex equations and included pictures. It includes details required by all of the platforms (e.g. which answer is correct) and also some specifics required by certain delivery methods (e.g. how many checkbox answers to put onto a single line of hardcopy). Model solutions are included for platforms that support them. Problems are classified by type (e.g. induction proof) so that response templates (e.g. induction outline) can be generated. From this format, we can render versions of the problems in latex, moodle's upload format, and (thanks to Ben Cosman) PrairieLearn's format.

Our problems are organized in groups, with a separate specification as to how the groups should be organized hierarchically for the online platofrm. This organization defines the sets from which problems are randomly drawn during exam generation. We also provide "contact sheets" that display all problems of a type, manually organized to make it easy for an instructor to browse the collection and understand where additional problems could be added.

We can divide the variant-generation problem into two tasks:

Most of my work has involved Discrete Structures, for which new structural variants can be hard to produce. It's important that variants test the intended skill, remain at the intended level of difficulty, and do not become excessively long and/or complex. Long, complex problems increase the time required to do each exam and impose an unfair burden on students whose first language is not English. It's easy to produce many structural variants for problems like determining whether a graph (shown as a picture) is transitive, but we have only 30 very easy induction proofs for the first time students are asked to write one.

Many of our problems include cosmetic details, such as the name of a function whose running time is being analyzed or the type of objects being chosen in a counting problem. These details make the problems seem more interesting. And they are sometimes (e.g. a function name) essential to writing the problem. But they can be used as keywords to memorize the answers to problems without understanding them, to look problems up on the internet (e.g. using a concealed device), or to give hints to a friend taking the exam at a later time.

It is frequently recommended that cosmetic values be generated randomly for each student at test time. However, it is just as effective to standardize the values across an offering of the exam. If a whole group of similar counting problems features (say) spiders this term, "spider" becomes an ineffective keyword, especially if the questions involved "ants" the previous term. This approach allows instructors to impose their own personal preferences on the cosmetics and it facilitates discussion of questions after the exam has finished. We make these cosmetic choices during the process of converting the YAML source to latex or to the upload format for an online system.

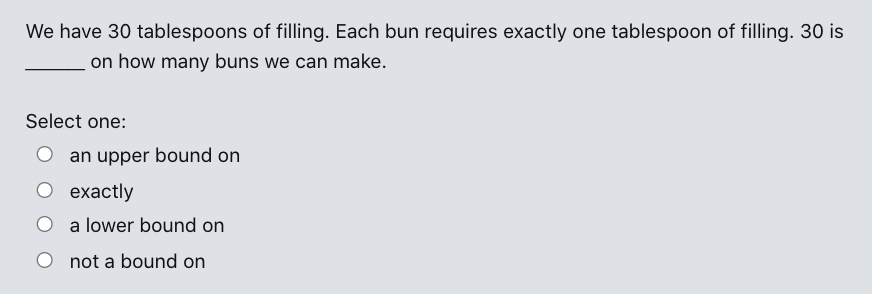

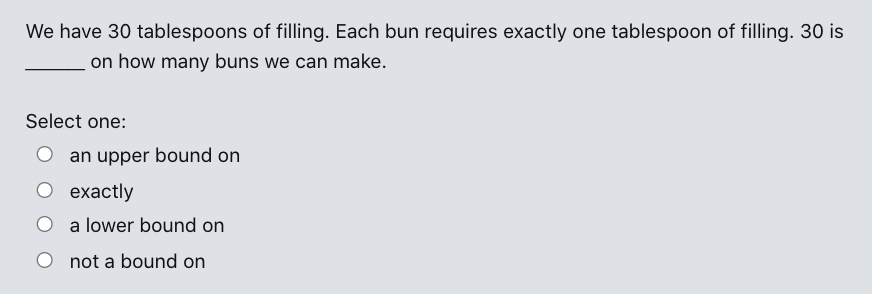

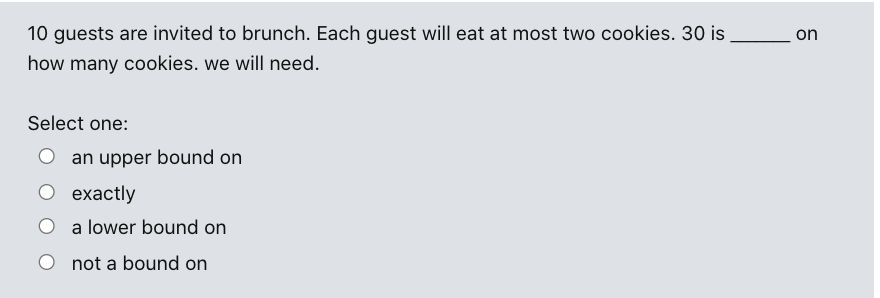

What makes this problem fun and interesting is that many problems require a filler of a specific type and there may be dependencies between fillers of two slots. For example, consider this problem, which tests whether students understand that bounds are not always tight.

If we want to substitute another word for "cookies," it needs to be something edible. It also must be plausible that a guest might naturally eat two or more. Either that or we have to also manipulate the numbers involved. Word problems frequently involve objects that may need to be edible, pourable, easy to position on a shelf, etc. People have certain types of names, different from the names we normally give to functions in a programming language. Collective nouns have to match the objects in the collection, e.g. flowers come in bunches, people belong to teams or committees. My current substitution engine uses a small selection of words and deals with some of the simpler linguistic issues, e.g. easy cases of choosing a pronoun and forming plurals. But there is clearly much scope for futher work.

Because we are using these questions in exams, it's very important that substitutions be reasonable and not confuse or distract the student. So this is not a problem that can be solved by simply dropping in a state-of-the art neural language generator, because these are still prone to occasional very strange errors. Instructors need strong control over authoring, even when substitutions are partly randomized.