Artificial Intelligence

(CS 440)

Margaret Fleck

University of Illinois


I have taught Artificial Intelligence six times: at Harvey Mudd College in Fall 1998 and Spring 2000, and at the University of Illinois in Fall 2018, 2019, 2020, and 2021. (Mark Hasegawa-Johnson teaches it in the spring.) At the University of Illinois, this course serves students with a wide range of experience. It can be taken directly after our data structures course, but it is also frequently taken by students later in our program and by master's students. It is intended as an accessible survey of the field and serves about 350 students each term. Because of this size, and because of recent pandemic restrictions, we make extensive use of canned online materials and automated grading.

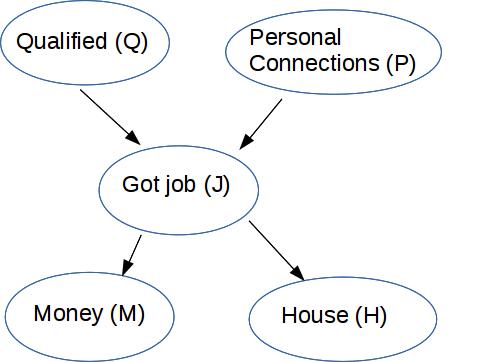

The dominant textbook for this topic is by Russell and Norvig. However, it is much too long for our typical student and places excessive focus on logic-based methods. I have therefore moved to including readings from other sources (notably Jurafsky and Martin), and I have constructed a set of lecture notes (canned pdf, live site for Fall 2021) that are over 200 pages long and mostly freestanding. These place greater emphasis on statistical and neural methods, and they interleave techniques with brief introductions to the main application areas. I also provide pointers to recent work that students are likely to find interesting and accessible. Finally, I try to provide a clear high-level analysis of the range of available approaches, along with their strengths and weaknesses.

Because of its size, the course was forced entirely online by the pandemic. As a result, I have a full set of lecture videos available on the web.

This course is centered around its programming assignments. When I first took on the course, students could write these in their choice of programming language, which meant each assignment had to be written from scratch. Grading was based on written reports, which might or might not accurately reflect what the code actually did. And the assignments were done in groups, largely to reduce the work required to grade them.

I made several improvements to the assignments, most of which have been adopted by the spring offerings of the course. The original series of four assignments was split into seven smaller assignments. These cover a wider range of topics, notably robot motion planning, HMM part-of-speech tagging, and using PyTorch to implement a neural net classifier. Students are now required to use Python and are provided with supporting code for tasks (e.g. graphical display) that aren't the main focus of the assignment. Grading is done automatically on Gradescope.

An interesting challenge for assignments at this level is giving students freedom to design their code while still accurately assessing whether their implementation is correct. Task performance is a surprisingly poor measure of correctness: incorrect algorithms can often be tweaked and tuned to outperform straightforward implementations of the intended method. Moreover, we sometimes specifically want students to build less powerful algorithms (e.g. a two-layer neural net) so they can see what these can and cannot do. Therefore, we supplement task performance with tests on synthetic examples and synthetic datasets designed to probe for common design flaws, e.g. replacing backtracing with a greedy approach when implementing an HMM part-of-speech tagger.
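To illustrate the kind of synthetic probe described above, here is a minimal sketch, not the course's actual autograder. It uses a hypothetical two-tag HMM (tags `A` and `B`, words `w1` and `w2`, with made-up probabilities) chosen so that committing to the locally best tag at each word gives a different answer than Viterbi decoding with backtracing. A submission that returns the greedy answer on this input can be flagged even if it scores well on real tagged text. The function names `viterbi` and `greedy` are illustrative assumptions.

```python
import math

# Hypothetical two-tag HMM, hand-built so greedy and Viterbi decoding disagree.
TAGS = ["A", "B"]
INIT = {"A": 0.6, "B": 0.4}
TRANS = {"A": {"A": 0.5, "B": 0.5}, "B": {"A": 0.1, "B": 0.9}}
EMIT = {"A": {"w1": 0.5, "w2": 0.1}, "B": {"w1": 0.5, "w2": 0.9}}


def viterbi(words, tags, init, trans, emit):
    """Most probable tag sequence under a first-order HMM (log space)."""
    # best[i][t]: log-prob of the best path ending in tag t at position i
    best = [{t: math.log(init[t]) + math.log(emit[t][words[0]]) for t in tags}]
    back = [{}]  # back[i][t]: predecessor tag on that best path
    for i in range(1, len(words)):
        best.append({})
        back.append({})
        for t in tags:
            prev = max(tags, key=lambda p: best[i - 1][p] + math.log(trans[p][t]))
            best[i][t] = (best[i - 1][prev] + math.log(trans[prev][t])
                          + math.log(emit[t][words[i]]))
            back[i][t] = prev
    # Backtrace from the best final tag to recover the whole sequence.
    path = [max(tags, key=lambda t: best[-1][t])]
    for i in range(len(words) - 1, 0, -1):
        path.append(back[i][path[-1]])
    return path[::-1]


def greedy(words, tags, init, trans, emit):
    """Flawed decoder: commits to the locally best tag at each word, no backtrace."""
    path = [max(tags, key=lambda t: init[t] * emit[t][words[0]])]
    for w in words[1:]:
        prev = path[-1]
        path.append(max(tags, key=lambda t: trans[prev][t] * emit[t][w]))
    return path


sentence = ["w1", "w2"]
print(viterbi(sentence, TAGS, INIT, TRANS, EMIT))  # ['B', 'B']
print(greedy(sentence, TAGS, INIT, TRANS, EMIT))   # ['A', 'B']
```

Here greedy picks `A` first (0.6 × 0.5 = 0.3 beats 0.4 × 0.5 = 0.2) and its best path has probability 0.3 × 0.45 = 0.135, while the path `B B` has probability 0.2 × 0.9 × 0.9 = 0.162. A test that expects `['B', 'B']` therefore separates the two designs on a two-word input.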

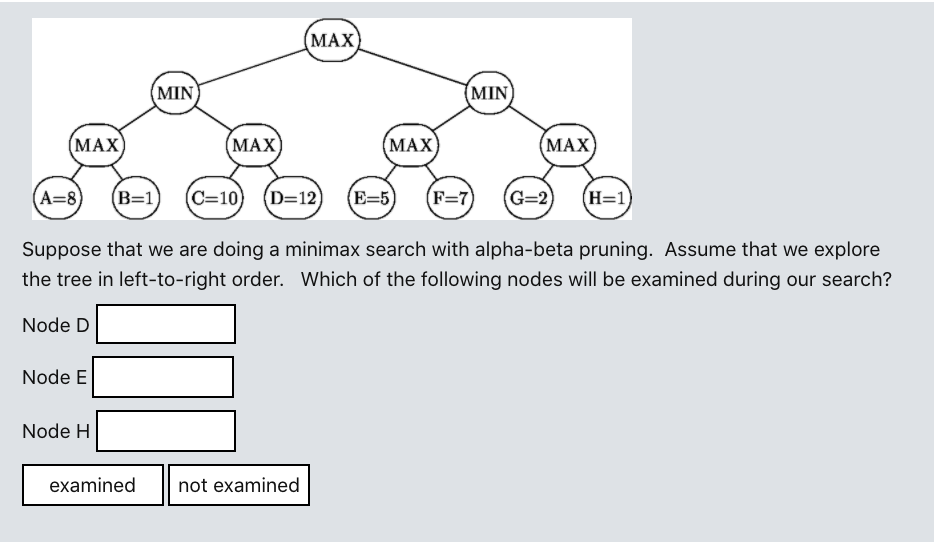

The exams and quizzes for this course have moved online. My skills lists can be seen here. Questions are randomly drawn from a (still growing) pool of 158 multiple-choice questions and 87 open-answer questions. The open-answer questions include model solutions, for the benefit of both the students and the TAs who grade them. Here is a sample question that was adapted from open-answer to autograded using Moodle's drag-and-drop question type:

Here are the web sites for several previous offerings: